This article gives a brief overview of some basic CacheManager features, with insights into how certain things work and how to configure them.

Strongly Typed Cache

The main interface consumers of this library will work with is ICacheManager<T> (implemented by BaseCacheManager<T>).

The interface is generic; the type parameter T defines the type of the cache value.

Strongly typed caching brings many advantages over an object cache; for example, values do not have to be cast when they are read from the cache.

That being said, T can also be set to object if you prefer to throw anything into the same CacheManager instance.

If you want to cache many different types in a strongly typed manner, don't worry, you do not have to configure each and every instance. The CacheManager configuration can be shared and re-used by all instances if you want. This also works well with DI for example.

If the same configuration is re-used, CacheManager will also take care of instantiating only the resources needed. For example, a Redis client/connection will be instantiated only once for all CacheManager instances using the same Redis configuration.
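As a rough sketch of sharing one configuration, the snippet below builds the configuration once and creates two differently typed instances from it. It assumes the ConfigurationBuilder.BuildConfiguration and CacheFactory.FromConfiguration<T> methods of CacheManager.Core and the CacheManager.SystemRuntimeCaching package; the exact overloads may differ between versions, and the keys and values are made up for illustration.

```csharp
using CacheManager.Core;

// Build the configuration once...
var sharedConfig = ConfigurationBuilder.BuildConfiguration(settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

// ...and reuse it for differently typed CacheManager instances.
var stringCache = CacheFactory.FromConfiguration<string>(sharedConfig);
var intCache = CacheFactory.FromConfiguration<int>(sharedConfig);

stringCache.Add("greeting", "hello");
intCache.Add("answer", 42);
```

With a DI container, the shared configuration object could be registered once and injected wherever a typed cache is created.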

Standard Cache Operations

CacheManager provides basic methods such as Get, Put, Add, Remove and Clear to operate on the cache.

The difference between Add and Put is that Add creates new keys only and will return false (and do nothing) if the key already exists, whereas Put will simply overwrite any existing value.

    cache.Add("key", "value");
    var value = cache.Get("key");
    cache.Remove("key");
    cache.Clear();
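To make the difference between Add and Put concrete, here is a minimal sketch. It assumes an in-process cache built with the CacheManager.SystemRuntimeCaching package; the cache name, handle name and values are arbitrary.

```csharp
using System;
using CacheManager.Core;

var cache = CacheFactory.Build<string>("demoCache", settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

// The key is new, so Add stores the value.
bool added = cache.Add("key", "first");

// The key already exists, so Add does nothing and reports that via its return value.
bool addedAgain = cache.Add("key", "second");

// Put does not check for an existing key and overwrites the stored value.
cache.Put("key", "third");

Console.WriteLine($"{added}, {addedAgain}, {cache.Get("key")}");
```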

Cache Regions

CacheManager supports a construct called regions, which can be used to control a set of cache keys. For example, the ClearRegion method removes all keys within one region. The implementation of regions depends on the cache vendor, but in most cases the cached key is simply prefixed with the region name.

All methods on ICacheManager have overloads that optionally take a region.

    cache.Add("key", "value", "region");
    var value = cache.Get("key", "region");
    cache.Remove("key", "region");
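The following sketch shows how ClearRegion might be used to drop one group of keys while leaving another untouched. The region names, keys and values are invented for the example, and the cache is assumed to be an in-process one from the CacheManager.SystemRuntimeCaching package.

```csharp
using CacheManager.Core;

var cache = CacheFactory.Build<string>("demoCache", settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

// Two keys in the "users" region, one key in the "settings" region.
cache.Add("user:1", "Alice", "users");
cache.Add("user:2", "Bob", "users");
cache.Add("theme", "dark", "settings");

// Removes all keys of the "users" region; the "settings" region is not affected.
cache.ClearRegion("users");
```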

Cache Handles

One of the main features of CacheManager is handling multiple cache layers.

Layers can be added to the CacheManager instance by adding so-called cache handles to it. There is a different cache handle for each supported cache vendor; the NuGet package for Redis, for example, contains the Redis-specific implementation of the cache handle.

To configure and add cache handles in code, call the matching WithXYZHandle method of the ConfigurationBuilder, where XYZ stands for the vendor (WithSystemRuntimeCacheHandle or WithRedisCacheHandle, for example).

The base WithHandle method is usually used internally only, or you can use it if you implement your own, custom cache handle.

Every CacheManager NuGet package which contains a cache handle has extension methods to add the vendor specific layer to the configuration and often also has vendor specific overloads to configure that cache instance.

Example:

    var cache = CacheFactory.Build<string>("myCacheName", settings =>
    {
        settings
            .WithSystemRuntimeCacheHandle("handle1");
    });

Adding multiple cache handles looks pretty much the same:

    var cache = CacheFactory.Build<string>("myCacheName", settings =>
    {
        settings
            .WithSystemRuntimeCacheHandle("handle1")
            .And
            .WithRedisCacheHandle("redis");
    });

Cache Item Handling

The configured cache handles will be stored as a simple list. But it is important to know that the order of how the cache handles are added to the configuration matters!

When retrieving an item, CacheManager iterates over all cache handles and returns the item from the first cache handle containing the key.

With the exception of Add, all other cache operations like Put, Update, Remove, Clear and ClearRegion will be executed on all configured cache handles. This is necessary because in general we want to have all layers of our cache in sync.

Add is an exception in that regard, as Add should only work if the key doesn't exist already. Now, if there are multiple layers of cache and, let's say, one out of two layers does not contain the key, it gets hard to decide what to do.

That's why Add will always try to add the key to the lowest (last) cache level only. It may then also remove the key from the layers above, so that the next Get hits the newly added key.

Cache Update Mode

Cache update mode handles synchronization across multiple cache handles configured for the CacheManager instance.

Let's say we have two cache handles configured and a Get operation finds the key in the second cache handle. CacheManager will then add the key to the first handle.

The next time a Get is executed on the same key, the item will be found in the first handle.

The same applies to cache evictions triggered by the underlying cache vendor.

There are two modes for CacheManager to handle this, defined by CacheUpdateMode:

- None - setting CacheUpdateMode to None will let CacheManager ignore updates or deletes and not synchronize the different layers.

- Up - instructs CacheManager to update cache handles "above" the one the cache item was found in (the order of the cache handles matters).
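A configuration that selects the update mode explicitly could look roughly like the sketch below. It assumes the WithUpdateMode method on the configuration builder; two in-process handles are used only so that the example does not need an external cache server, and the handle names are arbitrary.

```csharp
using CacheManager.Core;

var cache = CacheFactory.Build<string>("myCacheName", settings =>
{
    settings
        // Items found in a lower layer get copied into the layers above it.
        .WithUpdateMode(CacheUpdateMode.Up)
        .WithSystemRuntimeCacheHandle("handle1")   // first (top) layer
        .And
        .WithSystemRuntimeCacheHandle("handle2");  // second (bottom) layer
});
```

In a real setup, the second layer would more typically be a distributed cache such as Redis.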

Cache Expiration

Setting an expiration timeout for cache items is common when working with caches, for example because we might not want a cache item to stay in memory forever.

With CacheManager, it is possible to control the cache expiration per cache handle and optionally override it per cache item.

Setting the expiration always has two parts, the ExpirationMode and the timeout (TimeSpan), e.g. .WithExpiration(ExpirationMode.Sliding, TimeSpan.FromSeconds(10)).
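As an illustration of per-handle expiration, the following sketch configures two layers with different settings. The handle names and timeouts are arbitrary, and two in-process handles are assumed only to keep the example free of external dependencies.

```csharp
using System;
using CacheManager.Core;

var cache = CacheFactory.Build<string>("myCacheName", settings =>
{
    settings
        // First layer: the timeout is extended on every access.
        .WithSystemRuntimeCacheHandle("handle1")
            .WithExpiration(ExpirationMode.Sliding, TimeSpan.FromSeconds(10))
        .And
        // Second layer: items expire a fixed time after they were created.
        .WithSystemRuntimeCacheHandle("handle2")
            .WithExpiration(ExpirationMode.Absolute, TimeSpan.FromMinutes(5));
});
```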

Expiration Modes

The ExpirationMode has three options:

- None will instruct CacheManager to not set any expiration.

- Absolute will set an absolute date on which the cache item should expire. If a TimeSpan is used to configure the expiration, the CreatedDate of the cache item will be used as a baseline.

- Sliding will instruct CacheManager to handle a sliding window expiration. Every time a key is accessed, the expiration date will be extended by the configured TimeSpan.

Important: Certain combinations of multiple cache layers and expiration settings might not work well together, for example a sliding expiration on the second level while the first level doesn't have any expiration. If the key gets hit through the first layer all the time, the expiration on the second layer is never extended. The key then eventually expires on the second layer and is removed from the first layer, too (via update mode).

Expiration per Item

An alternative to the "per handle" expiration configuration is to define an expiration for a CacheItem explicitly.

To do so, use the CacheItem object and the corresponding overloads on ICacheManager instead of the key/value overloads.

Important: Setting the expiration via CacheItem will override/ignore any previously configured expiration on cache handles.

    var item = new CacheItem<string>(
        "key", "value", ExpirationMode.Absolute, TimeSpan.FromMinutes(10));
    cache.Add(item);

To retrieve a cache item and change the expiration, use the GetCacheItem method for example.

    var item = cache.GetCacheItem("key");
    cache.Put(item.WithExpiration(ExpirationMode.Sliding, TimeSpan.FromMinutes(15)));

Cache Events

The ICacheManager interface defines several events which get triggered by certain cache operations by the cache vendor or other event systems.

To subscribe to an event, simply add an event listener:

cache.OnAdd += (sender, args) => ...;

The following cache operation related events are available: OnAdd, OnClear, OnClearRegion, OnGet, OnPut, OnRemove and OnUpdate. These events are triggered globally, only once per operation and not per cache layer.
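A slightly fuller sketch of event subscriptions is shown below. It assumes that the event arguments expose Key (for item operations) and Region (for ClearRegion) properties; the cache setup and the console output are only there for illustration.

```csharp
using System;
using CacheManager.Core;

var cache = CacheFactory.Build<string>("demoCache", settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

// Each handler runs once per operation, regardless of how many layers exist.
cache.OnAdd += (sender, args) => Console.WriteLine($"Added key '{args.Key}'");
cache.OnRemove += (sender, args) => Console.WriteLine($"Removed key '{args.Key}'");
cache.OnClearRegion += (sender, args) => Console.WriteLine($"Cleared region '{args.Region}'");

cache.Add("key", "value", "region");
cache.Remove("key", "region");
cache.ClearRegion("region");
```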

OnRemoveByHandle Event

The OnRemoveByHandle event might get triggered if the underlying cache vendor decides to remove a key (if the key expires for example).

This event triggers per cache layer and the returned arguments object contains an integer value for the Level (starting at 1) the event was triggered at.

The arguments might also contain the removed value, if the cache vendor supports this feature.

If you use Redis cache, you can enable KeyspaceNotifications on the Redis server and in CacheManager.

This allows CacheManager to subscribe to the event system of Redis and listen for eviction events or manual deletes, for example (keyspace notifications are disabled by default).
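A handler for this event might be sketched as follows. The Level and the optional removed value are described above; the property names Key and Value on the arguments object are assumptions here and should be checked against the version of the library in use.

```csharp
using System;
using CacheManager.Core;

var cache = CacheFactory.Build<string>("demoCache", settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

cache.OnRemoveByHandle += (sender, args) =>
{
    // Level is the 1-based index of the cache layer which removed the key.
    Console.WriteLine($"Key '{args.Key}' was removed by the handle at level {args.Level}");

    // The removed value is only available if the cache vendor supports it.
    if (args.Value != null)
    {
        Console.WriteLine($"Removed value: {args.Value}");
    }
};
```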

Events and the Backplane

If the backplane feature is enabled, events are also triggered by backplane messages. The backplane will synchronize the cache, in case a key got updated for example, and then trigger the event.

To determine the difference, all event arguments have an Origin flag which will be set to remote in case the event got triggered by another instance of the cache through the backplane.
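The check could look like the sketch below, which assumes the origin is exposed as args.Origin with the enum type CacheActionEventArgOrigin. An in-process cache without a backplane is used to keep the example short, so only the local branch would ever run here.

```csharp
using System;
using CacheManager.Core;

var cache = CacheFactory.Build<string>("demoCache", settings =>
    settings.WithSystemRuntimeCacheHandle("handle1"));

cache.OnPut += (sender, args) =>
{
    if (args.Origin == CacheActionEventArgOrigin.Remote)
    {
        // Triggered by a backplane message from another cache instance.
        Console.WriteLine($"Key '{args.Key}' was changed remotely");
    }
    else
    {
        // Triggered by an operation on this instance.
        Console.WriteLine($"Key '{args.Key}' was changed locally");
    }
};

cache.Put("key", "value");
```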

Statistics and Counters

CacheManager optionally can collect statistical information about what is going on in each cache layer and can also update performance counters. Both features are disabled by default.

Both can be enabled or disabled per cache handle, for example:

    var cache = CacheFactory.Build("cacheName", settings => settings
        .WithSystemRuntimeCacheHandle("handleName")
        .EnableStatistics()
        .EnablePerformanceCounters());

Note: Disabling statistics will also disable performance counters and enabling performance counters will enable statistics. Collecting the numbers and updating performance counters can cause a slight performance decrease.

Statistics

Statistics are a collection of numbers, each identified by a value of the CacheStatsCounterType enum. The following numbers are collected:

- cache hits

- cache misses

- number of items in the cache

- number of Remove calls

- number of Add calls

- number of Put calls

- number of Get calls

- number of Clear calls

- number of ClearRegion calls

Statistics can be retrieved for each handle by calling handle.Stats.GetStatistic(CacheStatsCounterType).

Example:

    foreach (var handle in cache.CacheHandles)
    {
        var stats = handle.Stats;
        Console.WriteLine(string.Format(
            "Items: {0}, Hits: {1}, Miss: {2}, Remove: {3}, ClearRegion: {4}, Clear: {5}, Adds: {6}, Puts: {7}, Gets: {8}",
            stats.GetStatistic(CacheStatsCounterType.Items),
            stats.GetStatistic(CacheStatsCounterType.Hits),
            stats.GetStatistic(CacheStatsCounterType.Misses),
            stats.GetStatistic(CacheStatsCounterType.RemoveCalls),
            stats.GetStatistic(CacheStatsCounterType.ClearRegionCalls),
            stats.GetStatistic(CacheStatsCounterType.ClearCalls),
            stats.GetStatistic(CacheStatsCounterType.AddCalls),
            stats.GetStatistic(CacheStatsCounterType.PutCalls),
            stats.GetStatistic(CacheStatsCounterType.GetCalls)
        ));
    }

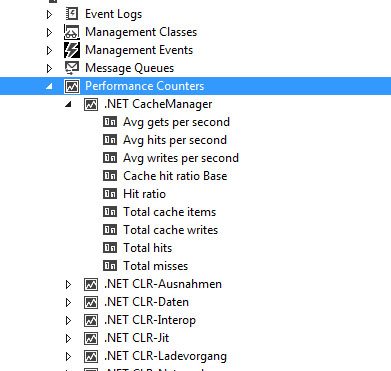

Performance Counters

If performance counters are enabled, CacheManager will try to create a new PerformanceCounterCategory named ".Net CacheManager" with several counters below.

Server Explorer:

Note: The creation of performance counter categories might fail because your application might run in a security context which doesn't allow the creation. In this case CacheManager will silently disable performance counters.

To see performance counters in action, run "perfmon.exe", select "Performance Monitor" and click the green plus sign on the toolbar. Now find ".Net CacheManager" in the list (it should be at the top) and select the instances and counters you want to track.

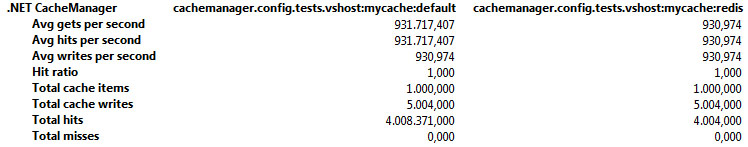

The result should look similar to this:

The instance name displayed in Performance Monitor is the host name of your application combined with the cache and cache handle's name.

System.Web.OutputCache

The CacheManager.Web NuGet package contains an implementation for System.Web.OutputCache which uses CacheManager to store the page results, if the OutputCache is configured to store them on the server.

Configuration of the OutputCache can be done via web.config:

    <system.web>
      <caching>
        <outputCache defaultProvider="CacheManagerOutputCacheProvider">
          <providers>
            <add cacheName="websiteCache" name="CacheManagerOutputCacheProvider" type="CacheManager.Web.CacheManagerOutputCacheProvider, CacheManager.Web" />
          </providers>
        </outputCache>
      </caching>
    </system.web>

The cacheName attribute within the add tag is important: it lets CacheManager know which cache configuration to use. That configuration must also be provided via web.config; configuration by code is not supported!